Tongyi Qianwen (Qwen) downloads have exceeded 40 million, “spawning” more than 50,000 derivative models.


The Yunqi Conference, the “Spring Festival Gala” of cloud computing, has opened, and the Tongyi Qwen large model once again stole the show!

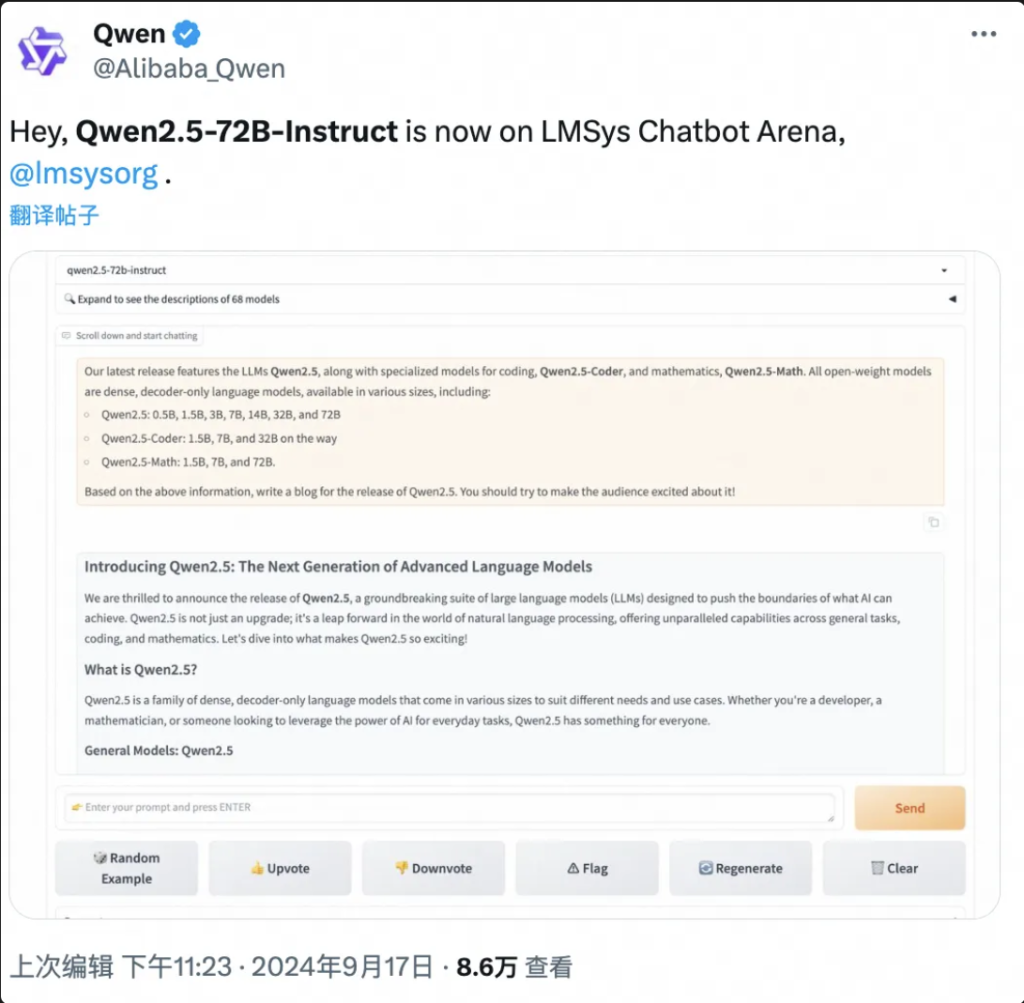

Zhidongxi reported from Hangzhou on September 19: today, Alibaba Cloud launched Qwen2.5-72B, billed as the world’s strongest open source large model. Its performance surpasses that of the far larger Llama3.1-405B, returning it to the throne of global open source large models.


Qwen2.5-72B outperforms Llama-405B in many authoritative tests.

At the same time, a large series of Qwen2.5 models was open-sourced, including the language model Qwen2.5, the visual language model Qwen2-VL-72B, the programming model Qwen2.5-Coder, and the mathematical model Qwen2.5-Math. More than 100 models have been released in total, and some of them match the performance of GPT-4o, setting a new world record.

“It’s Crazy Thursday”, “Epic Product” ... In the few hours since its release, Qwen2.5 has set off a frenzy of discussion on social media at home and abroad, and developers around the world have joined in to try it out.

▲Qwen2.5 is a hot topic on social media at home and abroad.

Take the Qwen2.5-Math trial, for example, which combines visual recognition with Qwen2.5-Math. Given a screenshot of a multiple-choice geometry question on similarity, it quickly recognizes what the question is asking and gives the correct solution and the answer “B”. Both the accuracy and the speed are impressive.


▲Qwen2.5-Math Trial

In just a year and a half since April 2023, Qwen has grown into a world-class model family second only to Llama.

According to the latest data released by Zhou Jingren, CTO of Alibaba Cloud, as of mid-September 2024, cumulative downloads of Tongyi Qwen open source models have exceeded 40 million, and more than 50,000 large models have been derived from them.

▲More than 50,000 large models have been derived from the Qwen series

What are the specific performance improvements of Qwen2.5? What are the highlights of the 100 new open source models?

Blog address: https://qwenlm.github.io/blog/qwen2.5/

Project address: https://huggingface.co/spaces/Qwen/Qwen2.5

▲The official blog announces Alibaba Cloud’s Qwen2.5 model family

01. Topping the global rankings again: Qwen2.5 overtakes the far larger Llama3.1-405B

Let’s take a look at the performance of Qwen2.5 specifically.

Qwen2.5 models support a context length of up to 128K tokens, can generate up to 8K tokens of content, and support more than 29 languages, which means they can help users write 10,000-word articles.
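To make these limits concrete, here is a minimal Python sketch of a context-budget check. It assumes that “128K” means 128 × 1024 tokens and “8K” means 8 × 1024 tokens, and that generated tokens count toward the context window; the article gives only the rounded figures. The helper name `fits_in_context` is hypothetical and not part of any Qwen library.

```python
# Illustrative limits based on the article's rounded figures.
# Assumption: "128K" = 128 * 1024 tokens and "8K" = 8 * 1024 tokens.
MAX_CONTEXT_TOKENS = 128 * 1024
MAX_GENERATED_TOKENS = 8 * 1024


def fits_in_context(prompt_tokens: int, requested_output_tokens: int) -> bool:
    """Return True if a prompt plus the requested output fits the stated limits.

    Hypothetical helper for illustration only. Assumes generated tokens share
    the context window with the prompt; real limits depend on the specific
    model size and serving configuration.
    """
    if prompt_tokens < 0 or requested_output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    if requested_output_tokens > MAX_GENERATED_TOKENS:
        return False
    return prompt_tokens + requested_output_tokens <= MAX_CONTEXT_TOKENS
```

Under these assumptions, a 100,000-token prompt leaves room for a full 8K-token reply, while a 130,000-token prompt does not.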

Moreover, pre-trained on 18T tokens of data, Qwen2.5 improves overall performance by more than 18% over Qwen2, with more knowledge and stronger programming and math skills.

▲AliCloud CTO Zhou Jingren explaining Qwen2.5

It is reported that the flagship model, Qwen2.5-72B, scored 86.8, 88.2, and 83.1 respectively on the MMLU-redux benchmark (which tests general knowledge), the MBPP benchmark (which tests coding ability), and the MATH benchmark (which tests math ability).

With 72 billion parameters, Qwen2.5 even outperforms Llama3.1-405B, a model in a much larger size class with 405 billion parameters (roughly 5.6 times as many).

Llama3.1-405B was released by Meta in July 2024, and in more than 150 benchmark test sets, it equaled or even surpassed the then SOTA (best in the industry) model GPT-4o, triggering the assertion that “the strongest open-source model is the strongest model”.

Qwen2.5-72B-Instruct, the instruction-following version of Qwen2.5, surpassed Llama3.1-405B in MMLU-redux, MATH, MBPP, LiveCodeBench, Arena-Hard, AlignBench, MT-Bench, MultiPL-E, and other authoritative evaluations.


▲Qwen2.5-72B evaluation results

Qwen2.5 is once again the world’s strongest open source model, contributing to the industry trend of “open source over closed source”.

This follows Alibaba Cloud’s open-sourcing of the Tongyi Qianwen Qwen2 series in June this year, which caught up with Llama3-70B, the strongest open source model at the time. The company has now released another series of open source versions.

It has become a familiar rhythm in the AI developer community that every new king that appears is soon surpassed by a new version of Tongyi.

After Qwen2.5 was open-sourced on the evening of September 18, many developers were so excited that they stayed up to try it right away.


▲Developers at home and abroad hotly discuss Qwen2.5

02. The largest model family in history


The Qwen2.5 open source model family is the largest release to date.

Zhou Jingren, CTO of Alibaba Cloud, announced at the Yunqi Conference that the Qwen2.5 series comprises more than 100 open source models in total, fully meeting the needs of developers and SMEs across a wide range of scenarios.

This answers the calls of many developers, who had long been urging the release on major social media platforms.

▲Developers at home and abroad urge more Qwen2.5

1. Language models: seven sizes from 0.5B to 72B, covering everything from on-device to industrial-grade scenarios

Qwen2.5 open-sources language models in seven sizes (0.5B, 1.5B, 3B, 7B, 14B, 32B, and 72B), each of which has achieved SOTA results in its size class.


▲Multi-size Qwen2.5 meets the needs of diverse scenarios

Given its advanced capabilities in Natural Language Processing (NLP) and coding understanding, Alibaba Cloud’s Qwen2.5 is designed to handle a broad range of applications. Here are some specific scenarios where Qwen2.5 can be utilized:

- Enhanced Customer Service: Qwen2.5 can be employed in chatbots for more human-like interactions, effectively answering customer queries and providing solutions.

- Content Creation: The model can assist in generating articles, social media posts, and other written content, saving time for content creators.

- Programming Assistance: With its specialized Qwen2.5-Coder series, it can assist developers by suggesting code snippets, debugging help, and automating certain coding tasks.

- Education and Learning: Qwen2.5 can be used to develop educational tools such as interactive learning platforms that provide explanations, answer student questions, and even grade assignments.

- Translation Services: Given its proficiency in multiple languages, it can provide real-time translation services to bridge the language gap in international communication.

- Research and Development: In research, Qwen2.5 can process large amounts of text data to assist in discovering patterns, summarizing findings, and generating reports.

- Legal Assistance: The model can read legal documents, extract relevant information, and even draft basic legal documents, saving time for legal professionals.

- Healthcare Support: Qwen2.5 can be used to answer common health questions, provide medical information, and assist in managing patient data.

- Marketing and Advertising: It can help create personalized marketing content, ad copy, and social media campaigns that target specific audiences.

- Data Analytics: Qwen2.5 can process and analyze large data sets to provide insights and help make data-driven decisions.

- Automated Reporting: The model can generate reports by processing data and information in a variety of areas, which is particularly useful in financial and business analysis.

- Game Development: Qwen2.5 can assist in creating interactive dialog and narratives for video game characters to enhance player engagement.

- Virtual Assistant: It drives virtual assistants that can manage schedules, set reminders, and provide personalized advice.

- E-commerce: Qwen2.5 can help generate product descriptions, handle customer inquiries, and create personalized shopping experiences.

- Multi-language Support: It can be used in global applications that require multilingual interaction, such as international customer service or content localization.

These seven sizes help developers balance model capability against cost and adapt to a variety of scenarios. For example, 3B is the sweet spot for phones and other on-device deployments, 32B is the size developers most anticipated as the “king of cost-effectiveness”, and 72B is the performance leader for industrial-grade and research-grade scenarios.
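As a rough illustration of this capability-versus-cost trade-off, the following Python sketch estimates the memory needed just to hold each model’s weights and picks the largest size that fits a given budget. The figure of 2 bytes per parameter (16-bit weights) is an assumption, the nominal sizes are used rather than exact parameter counts, and the function names are hypothetical. Activations, KV cache, and runtime overhead are not included, so real requirements will be higher.

```python
# Nominal parameter counts (in billions) as listed in the article.
QWEN25_SIZES_B = [0.5, 1.5, 3, 7, 14, 32, 72]


def weight_memory_gb(params_billion: float, bytes_per_param: float = 2.0) -> float:
    """Approximate memory for the weights alone, in GB (10^9 bytes).

    Assumption: 2 bytes per parameter (16-bit weights) by default.
    Activations, KV cache, and runtime overhead are NOT included.
    """
    return params_billion * bytes_per_param


def largest_size_that_fits(budget_gb: float, bytes_per_param: float = 2.0):
    """Return the largest listed size whose weights fit the budget, or None."""
    fitting = [
        size for size in QWEN25_SIZES_B
        if weight_memory_gb(size, bytes_per_param) <= budget_gb
    ]
    return max(fitting) if fitting else None
```

For instance, `weight_memory_gb(72)` gives 144 GB for 16-bit weights, while an 8 GB budget only accommodates the 3B model under the same assumption, which is consistent with 3B being positioned for on-device use.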

Blog address: https://qwenlm.github.io/zh/blog/qwen2.5-llm/

2. Multimodal models: the vision model understands 20-minute videos, and the audio-language model supports 8 languages

Qwen2-VL-72B, the highly anticipated large-scale visual language model, is officially open-sourced today.

Qwen2-VL can recognize images of different resolutions and aspect ratios, understand long videos of more than 20 minutes, and act as a visual agent that operates phones and other devices. Its visual understanding surpasses the GPT-4o level.

▲Large-scale visual language model Qwen2-VL-72B open source

Qwen2-VL-72B became the highest scoring open source visual understanding model in the global authoritative evaluation LMSYS Chatbot Arena Leaderboard.


Qwen2-Audio, the large-scale audio language model, is an open source model that can understand human speech, music, and natural sounds. It supports voice chat and audio message analysis across more than 8 languages and dialects, and it leads globally on mainstream evaluation metrics.

Blog address: http://qwenlm.github.io/blog/qwen2-vl/

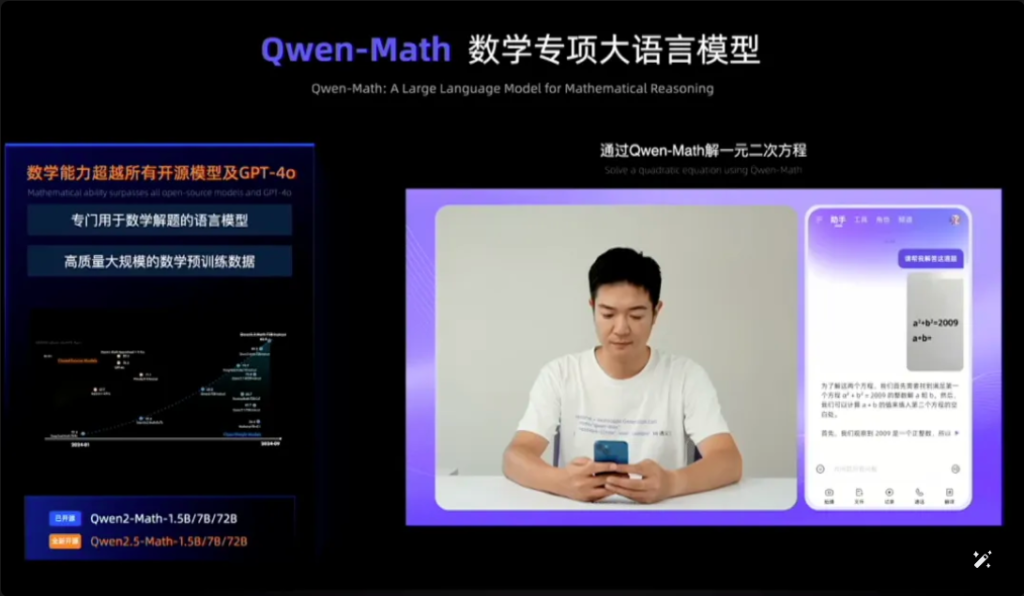

3. Specialized models: the most advanced open source math model debuts, catching up with GPT-4o

Qwen2.5-Coder for programming and Qwen2.5-Math for math were also announced as open source at this Yunqi Conference.

Among them, Qwen2.5-Math is the most advanced open source math model series to date. This release open-sources three sizes (1.5B, 7B, and 72B) along with the math reward model Qwen2.5-Math-RM.

▲Qwen2.5-Math open source

The flagship model Qwen2-Math-72B-Instruct outperforms proprietary models such as GPT-4o and Claude 3.5 in math-related downstream tasks.

Qwen2.5-Coder, which was trained on up to 5.5T tokens of programming-related data, was open-sourced on the same day in 1.5B and 7B versions, and a 32B version will be open-sourced in the future.

▲Qwen2.5-Coder open source

Blog address:

https://qwenlm.github.io/zh/blog/qwen2.5-math/

https://qwenlm.github.io/zh/blog/qwen2.5-coder/

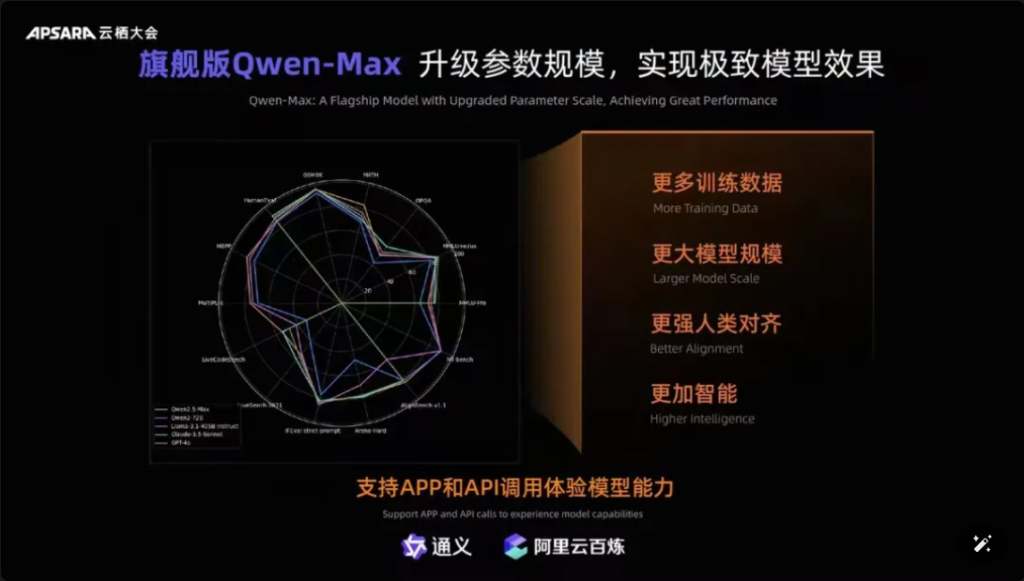

In addition, it is worth mentioning that Qwen-Max, the flagship model of Tongyi Qianwen, has been fully upgraded, approaching or even surpassing GPT-4o on more than ten authoritative benchmarks such as MMLU-Pro and MATH. It is now live on the official Tongyi Qianwen website and in the Tongyi app. Users can also call the Qwen-Max API through the Alibaba Cloud Bailian platform.


▲Qwen-Max receives a comprehensive upgrade
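As a sketch of what calling Qwen-Max through the platform’s API might look like, the following Python snippet builds an OpenAI-style chat completion request using only the standard library, without sending it. The endpoint URL and the model id `qwen-max` are assumptions not taken from the article and should be checked against the platform’s current documentation. The helper name `build_chat_request` is hypothetical.

```python
import json
import urllib.request

# Assumptions (not from the article): verify the endpoint URL and model id
# against the platform's current documentation before use.
ASSUMED_ENDPOINT = "https://dashscope.aliyuncs.com/compatible-mode/v1/chat/completions"
ASSUMED_MODEL_ID = "qwen-max"


def build_chat_request(api_key: str, user_prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": ASSUMED_MODEL_ID,
        "messages": [{"role": "user", "content": user_prompt}],
    }
    return urllib.request.Request(
        ASSUMED_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would actually send the request; that step is left out here so the sketch runs without a network connection or a real API key.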

Since the release of the first generation of the Tongyi Qianwen large model in April 2023, Alibaba Cloud has enabled Chinese enterprises to use large models at low cost, which in turn has driven today’s Qwen2.5 series to become “more and more useful”.

The Qwen2.5 series covers base, instruction-following, and quantized versions, all refined through iteration in real-world scenarios.

03. Qwen downloads exceed 40 million, with more than 50,000 derivative “offspring” models

After a year and a half of rapid development, Tongyi Qwen has become a world-class model family second only to Llama.

Zhou Jingren announced two sets of the latest data to confirm this:

First, the number of model downloads: as of early September 2024, cumulative downloads of Tongyi Qianwen’s open source models had exceeded 40 million, the result of developers and SMEs voting with their feet;

Second, the number of derived models: as of early September, the total number of Tongyi’s native and derived models exceeded 50,000, second only to Llama.

▲Cumulative downloads of Tongyi Qianwen open source models exceed 40 million

By now, “all models from the same source” has become a significant trend in the development of China’s large model industry.

What does this mean? Chinese open source now leads the world not only in performance but also in ecosystem growth. Open source communities, ecosystem partners, and developers at home and abroad have become organic advocates for Tongyi Qianwen, which is the first large model many enterprises adopted and also the one they have used the longest.

As early as August 2023, Alibaba Cloud open-sourced the 7-billion-parameter Tongyi Qwen model and made it free for commercial use. This year, Qwen1.5, Qwen2, and Qwen2.5 were released one after another, letting developers quickly use the most advanced models while gaining greater control and room for tuning, which has made Qwen the preferred choice of more enterprises.

In early July, engineers from Hugging Face, the world’s largest open source community, tweeted that Tongyi is the hardest-working large model in China. Through Alibaba Cloud, the Tongyi large models have served more than 300,000 customers across industries. In the just-ended second quarter of 2024 (Q1 of Alibaba’s fiscal year 2025), Alibaba Cloud’s AI-related product revenue achieved triple-digit growth.

▲Tongyi large models serve more than 300,000 customers

What did Alibaba Cloud do right?

In my opinion, Alibaba Cloud differs from the major overseas vendors, where Microsoft is tightly bound to ChatGPT and Amazon AWS aggregates third-party models on top of its underlying infrastructure. Alibaba Cloud combined the strengths of both: from the outset, it chose to pursue AI infrastructure services and self-developed large models in parallel.

On the self-developed model side, Alibaba Cloud is the only cloud giant in China that is firmly and clearly committed to open-sourcing and opening up its models. It has spared no effort in investing heavily in model breakthroughs, ecosystem compatibility, and developer services, bringing the Tongyi large models step by step into the core circle of global AI competition.

04. Conclusion: all models from the same source, an industry watershed moment

Open source models are catching up with or even surpassing closed source models. From Meta’s Llama-405B in July to Alibaba Cloud’s Qwen2.5-72B today, the landscape of “all models from the same source” is taking shape. The year-and-a-half surge of the Tongyi Qianwen large model has allowed many industries and enterprises to deploy AI at scale at lower cost, and the industry is entering a new watershed moment.

FAQs

- Q: What are the different model sizes available for Qwen2.5? A: Qwen2.5 offers a range of models from 0.5B to 72B parameters, providing options for various applications and requirements.

- Q: How does Qwen2.5 support programming tasks? A: Qwen2.5 includes a specialized series called Qwen2.5-Coder, designed to enhance code generation, code reasoning, and debugging, with support for up to 128K tokens.

- Q: What is the training data like for Qwen2.5? A: Qwen2.5 is pre-trained on a vast dataset covering up to 18 trillion tokens, ensuring a broad understanding of language.

- Q: Is Qwen2.5 suitable for use in education? A: Yes, Qwen2.5’s capabilities in natural language processing make it suitable for educational tools, including interactive learning platforms.

- Q: How does Qwen2.5 perform in benchmarks compared to other models? A: Qwen2.5-72B-Instruct surpasses Llama3.1-405B on evaluations including MMLU-redux, MATH, MBPP, LiveCodeBench, Arena-Hard, AlignBench, MT-Bench, and MultiPL-E.

- Q: What languages does Qwen2.5 support? A: Qwen2.5 supports more than 29 natural languages.

- Q: Can Qwen2.5 generate code in multiple programming languages? A: Yes, Qwen2.5-Coder is capable of generating code in various programming languages, catering to the needs of different developers.

- Q: How does Qwen2.5 handle long contexts? A: Qwen2.5 supports context lengths of up to 128K tokens and can generate up to 8K tokens of content, which is beneficial for improving the accuracy of code completion and inference.

- Q: Is Qwen2.5 open source? A: Yes. The Qwen2.5 series has been open-sourced, with more than 100 models released in total, and detailed documentation and examples are available on platforms like GitHub.

- Q: What are some real-world application scenarios for Qwen2.5? A: Qwen2.5 can be utilized in customer service chatbots, content creation, programming assistance, education, translation services, legal assistance, healthcare support, marketing, data analytics, automated reporting, game development, virtual assistants, e-commerce, and multilingual support across various industries.