PixelDance: High-Dynamic Video Generation

ByteDance unveils a new AI video model: "Goodbye, Sora, your time is up."

PixelDance: The Best Text-to-Video Model

Doubao video generation comes in two models: PixelDance and Seaweed.

I will cover the Seaweed model next time. This time I want to talk about the Doubao PixelDance model, because it is so impressive that I watched it in awe the whole time. It stands out in three areas: complex, continuous character motion; multi-shot video; and extreme camera control.

Multi-shot video. The ability to generate a multi-shot video with a consistent style, scene, and characters from a single image plus a prompt is something I had only seen in Sora's promo video.

Extreme camera control. The Doubao PixelDance model is the most outrageous and stunning thing I have ever seen in this area.

Today, camera control in AI video still relies mostly on a combination of two features, camera controls and the motion brush. Frankly, the ceiling of that approach is low: many sweeping camera moves and zooms simply cannot be achieved.

Characters can perform continuous actions. Until now, AI videos had one major weakness: they looked like PPT slide animations.

PixelDance Showcase Videos

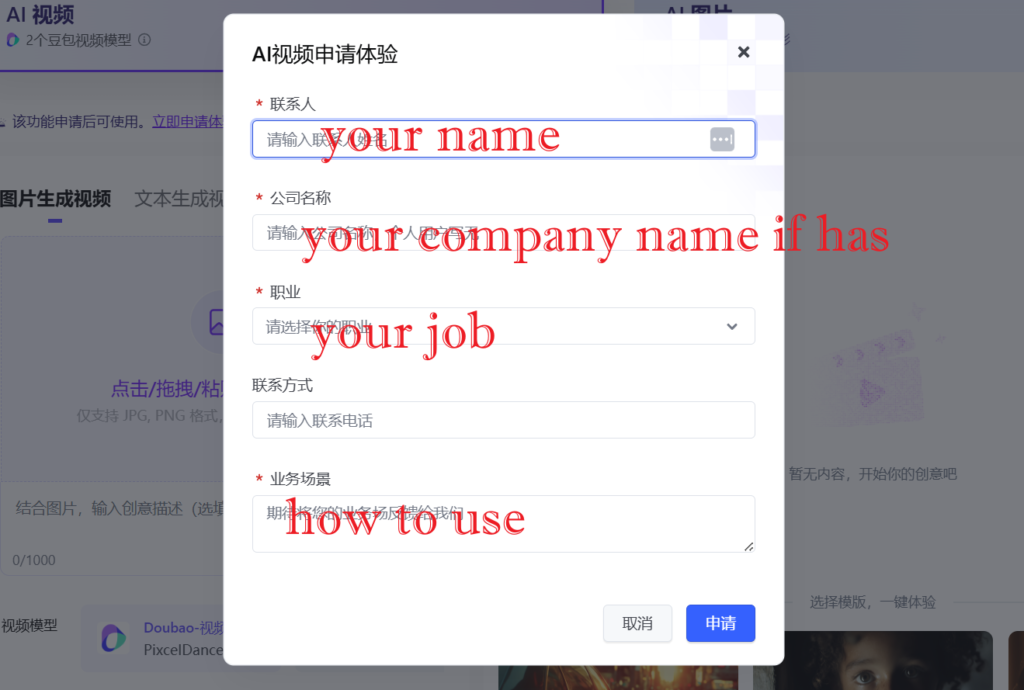

How to Apply for PixelDance Now

https://console.volcengine.com/ark/region:ark+cn-beijing/experience/vision?type=GenVideo

First, register an account:

Log in with your mobile phone number.

You can apply for access here:

That's it. Now wait for a reply.